Computational Design Thinking: A review by Szymon Wojtyla

The transition from traditional methods of architectural representation to computer-aided design is a frequent topic of discussion in today’s architectural profession. Computational Design Thinking is a book that collects a series of short texts in which the authors discuss the impact of computational thinking on architectural systems.

The book’s overarching question is which trajectory the profession should follow in harnessing computational power in architectural work. It draws a comparison between computation and computerisation, two terms that over the last few decades have often been used interchangeably, and often wrongly.

The use of computers, and its theorisation, originated far from the discipline of architecture; the theories discussed in this book therefore have multiple points of origin, including mathematics, physics, biology and the socio-economic disciplines.

Computerisation vs. Computation

Computerisation, by definition, is the process of using a computer to carry out tasks that were originally done by people. This is a very broad definition. To relate it more closely to architecture, we can look at computerisation as the digitisation of information: the creation of drawings, models and schedules that are combined into a coherent whole as part of a broader system.

Computation, on the other hand, is the use of a computer’s calculating power to find multiple solutions to a question that must be logical in nature. This has shifted the way architects solve problems of the built environment: it expands the set of tools available and so enables more comprehensive problem solving in less time.

William J. Mitchell and Kostas Terzidis give us an understanding of computers as intelligent machines, questioning the role of authorship and presenting computational design as capable of ‘deducing specificity’, a way of thinking that uses computers as multi-modal, dynamic liberators of design. Achim Menges explains the difference between computer-aided and computational processes, arguing that the value of computation as a design methodology is to formulate the specific. Computer-aided processes begin with the specific, with the object; computational processes, on the other hand, start with elemental properties and generative rules and end with information that derives form as a dynamic system.

Computation as Problem Solver

The book tackles the issue of systems across a much broader spectrum than architecture alone, yet always relates them back to systemic problem-solving tactics. It mentions precursors of today’s thinking such as Darwin, D’Arcy Thompson and Goethe, all of whom studied natural forms in relation to their environment. This work led to the establishment of morphology as a discipline: the rigorous study of form and its geometrical documentation.

Mark Burry traces the relevance of computation in design through his work deciphering the complex geometries of Gaudí’s Sagrada Família for construction. He investigates the development of specific geometric and procedural methods, driven by the critical task of connecting computational form with material form. The challenge lies in identifying where particular mathematical means of generating geometry fail to align with the rules that govern the scalar issues of structure, materiality and assembly. In a second example, Burry shows the linear connection between the processes developed for extrapolating form from Gaudí’s underlying geometric principles. This already reveals a distinction between the computational, as a state of being, and computation as an act. The computational is the realm in which processes are captured, whereas computation in itself implies operation: the coordination of operators that allows knowledge to be inherited into the process and specificity relevant to material and context to be realised. Burry identifies this coordination as a capacity of the architect who, despite being an observer, remains integral to coordinating the ‘observations’, which makes the architect part of a much larger system.

Creative Evolutionary Systems

Creativity in design is then considered as an evolutionary process, in which genotypic parameters serve as an exploratory engine for producing variety. Bentley and Corne describe an iterative process for finding specific solutions from generalised information as a sequence of steps: from genotype, through embryogeny, to phenotype and evaluation. Evaluation is an essential element of this process, in which the initial generative parameters are put through a specific set of procedures (known as evolutionary algorithms) and subjected to recursive, constant reconfiguration in search of the optimal outcome. The desired outcome often has to be defined in advance so that the required characteristics appear in the final artifact; the remaining outcomes are discarded.
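
The genotype, embryogeny, phenotype and evaluation cycle can be sketched as a small genetic algorithm. This is a minimal illustration under stated assumptions, not Bentley and Corne’s method: the target row of panel heights (`TARGET`), the mapping from genes to heights in `embryogeny`, and the selection scheme (keep the fitter half, breed the rest by one-point crossover and mutation) are all hypothetical choices made for the example.

```python
import random

# Hypothetical, pre-determined target: the characteristics the final
# artifact must exhibit (here, heights in metres of a row of 12 panels).
TARGET = [3, 3, 4, 4, 5, 5, 5, 5, 4, 4, 3, 3]
GENE_MAX = 7  # each gene is an integer generative parameter in 0..7


def random_genotype(rng):
    """Create a genotype: one random generative parameter per panel."""
    return [rng.randint(0, GENE_MAX) for _ in TARGET]


def embryogeny(genotype):
    """Grow a phenotype from a genotype (illustrative mapping: each gene g
    becomes a panel height of 2 + g // 2 metres, i.e. 2 to 5)."""
    return [2 + g // 2 for g in genotype]


def evaluate(phenotype):
    """Fitness: negative total deviation from TARGET (0 is a perfect match)."""
    return -sum(abs(p - t) for p, t in zip(phenotype, TARGET))


def fitness(genotype):
    """Genotype -> embryogeny -> phenotype -> evaluation."""
    return evaluate(embryogeny(genotype))


def crossover(a, b, rng):
    """One-point crossover: head of one parent, tail of the other."""
    cut = rng.randint(1, len(a) - 1)
    return a[:cut] + b[cut:]


def mutate(genotype, rng, rate=0.1):
    """Replace each gene with a random value with probability `rate`."""
    return [rng.randint(0, GENE_MAX) if rng.random() < rate else g for g in genotype]


def evolve(pop_size=30, generations=200, seed=1):
    """Return (best phenotype, its fitness) after recursive reconfiguration."""
    rng = random.Random(seed)
    population = [random_genotype(rng) for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        if fitness(ranked[0]) == 0:  # required characteristics reached
            break
        # Keep the fitter half; the remaining outcomes are discarded.
        parents = ranked[: pop_size // 2]
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    best = max(population, key=fitness)
    return embryogeny(best), fitness(best)


if __name__ == "__main__":
    phenotype, score = evolve()
    print("best phenotype:", phenotype, "fitness:", score)
```

Because the fitter half always survives into the next generation, the best fitness never decreases; swapping `evaluate` for a human judgement would turn this into the kind of interactive, exploratory system described next.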

Creative evolutionary systems are used to aid the creativity of users by helping them explore ideas during evolution. Human interaction enables the evolution of aesthetically pleasing solutions. By removing constraints, the search moves into a representation that allows evolution to assemble new solutions. These methods turn evolution into an explorer of what is possible, instead of an optimiser of what is already there.

Emergence

The process in which the interaction of simple conditions generates a complex phenomenon is called emergence. The result cannot be deduced from the initial characteristics of the elements analysed. The idea draws on John Holland’s concepts and techniques for genetic algorithms, which he then extended to the computational mechanisms underlying emergent systems. He uses the word ‘model’ to describe the collection of procedures and operators that interact to produce a set of possibilities. The key functions are called ‘transition functions’: they determine how the system moves from one state to the next, and so map out the possible states that can arise.

Fundamentally, emergence concerns the capacity of simple agents to develop complex behaviour, but only when they are engaged in a network. When the behaviour of an agent changes, the conditions change. Evaluating emergence makes it possible to capture all possible states of the model, which can then be assessed against a set of criteria established by the architect. Defining constrained generating procedures provides a setting in which we can study the complexities, and instances of emergence, that arise when rule-governed entities interact. It requires selecting a set of procedures, described as ‘primitives’, from which everything else will be constructed.

Cellular Automata

The idea of emergence can be visualised through cellular automata. A particularly simple one, constructed by John Conway, is called ‘Life’. Despite its simplicity, it shows fascinating examples of emergence. Life is constructed from copies of a single mechanism with just two states, represented as a Boolean 0 or 1. The lattice of connections is a square array in which each node has eight immediate neighbours. It then undergoes a series of transitions: if a cell is empty (state 0) and exactly three of its immediate neighbours are occupied (state 1), then in the next step that cell becomes occupied; otherwise it stays empty. If a cell is occupied and either two or three of its immediate neighbours are occupied, the cell remains occupied; otherwise it becomes empty.
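
The two transition rules above fit in a few lines of code. The sketch below assumes an unbounded lattice, storing only the coordinates of occupied cells in a set (the book describes a square array; a finite grid would additionally need a rule for its edges). The function name `step` and the blinker example are illustrative choices.

```python
from collections import Counter

# Offsets of the eight immediate neighbours on the square lattice.
NEIGHBOURS = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]


def step(live):
    """Advance Conway's Life by one generation.

    `live` is a set of (row, col) cells in state 1 (occupied); every other
    cell of the (here unbounded) square lattice is in state 0 (empty).
    """
    # For every cell next to an occupied cell, count its occupied neighbours.
    counts = Counter((r + dr, c + dc) for r, c in live for dr, dc in NEIGHBOURS)
    return {
        cell
        for cell, n in counts.items()
        # Empty with exactly three occupied neighbours -> becomes occupied;
        # occupied with two or three occupied neighbours -> stays occupied.
        # Every other cell is (or becomes) empty.
        if n == 3 or (n == 2 and cell in live)
    }


# A "blinker": three cells in a row oscillate between horizontal and vertical.
blinker = {(1, 0), (1, 1), (1, 2)}
print(sorted(step(blinker)))  # [(0, 1), (1, 1), (2, 1)]
```

Occupied cells with no occupied neighbours never appear in `counts`, so they drop out of the next generation, exactly as the “otherwise it becomes empty” rule requires.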

This process can be used in architectural investigations, especially when patterns or recurrence are sought, for example in studies of elevations or plans. Moreover, patterns of occupied cells can be designed to interact with one another; because such interacting patterns can be arranged to carry out arbitrary computations, Life itself can be seen as a general-purpose computer.

There are several rules that can be learned from emergence:

Simple rules (transition functions) can generate coherent, emergent phenomena;

Emergence centres on interactions that are more than a summing of independent activities;

Persistent emergent phenomena can serve as components of more complex emergent phenomena.
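
The third lesson can be seen in Life’s best-known moving pattern, the glider. This example is not taken from the book: it is a standard illustration, sketched here under the same assumptions as a set-based, unbounded lattice, with hypothetical helper names (`run`, `shift`). The glider is a five-cell shape that rebuilds itself every four generations one cell further along the diagonal, and streams of gliders are what Life constructions use as signals when assembling larger machinery, including computers.

```python
from collections import Counter

NEIGHBOURS = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]


def step(live):
    """One generation of Conway's Life on an unbounded square lattice."""
    counts = Counter((r + dr, c + dc) for r, c in live for dr, dc in NEIGHBOURS)
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


def run(live, generations):
    """Apply the transition function `generations` times."""
    for _ in range(generations):
        live = step(live)
    return live


def shift(cells, dr, dc):
    """Translate a pattern by (dr, dc)."""
    return {(r + dr, c + dc) for r, c in cells}


# The glider:   . # .
#               . . #
#               # # #
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

# After four generations the same five-cell shape reappears, moved one cell
# down and one cell right: a persistent pattern that travels across the
# lattice and can interact with other patterns.
print(run(glider, 4) == shift(glider, 1, 1))  # True
```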

Natural Model for Architecture

Another text included is an extract from John Frazer’s seminal book ‘An Evolutionary Architecture’, which established a fundamental approach to how natural systems can reveal mechanisms for negotiating the complex design space inherent in architectural systems. Correlations and distinctions are then drawn from natural processes, which are emulated in design processes and form an active manifestation within natural systems. The evolution of form is seen as an environment in which genetic code interacts and generates new iterations. Frazer’s process establishes the realm in which computation must manoeuvre to produce a valid solution space, including the operations of self-organisation, complexity and emergent behaviour.

In this model, design is seen as the emergence of form, taking thought, organisation and strategy as its baseline. It focuses on practising the evolutionary paradigm, which creates architecture born of the relationship between dynamic environmental and socio-economic contexts, and which is then realised through morphogenetic materialisation.

It is worth noting that the process requires a clear distinction between sources of inspiration and sources of explanation. When natural science is used for explanation or illustration, one must check whether the analogy is valid. It is also important to differentiate between different kinds of theory. According to Lionel March, “Logic has interests in abstract form. Science investigates extant forms. Design initiates novel forms. A scientific hypothesis is not the same thing as a design hypothesis. A logical proposition is not to be mistaken for a design proposal.” This leads to the conclusion that, in this context, the theory in question is a theory of generation.

Morphogenesis

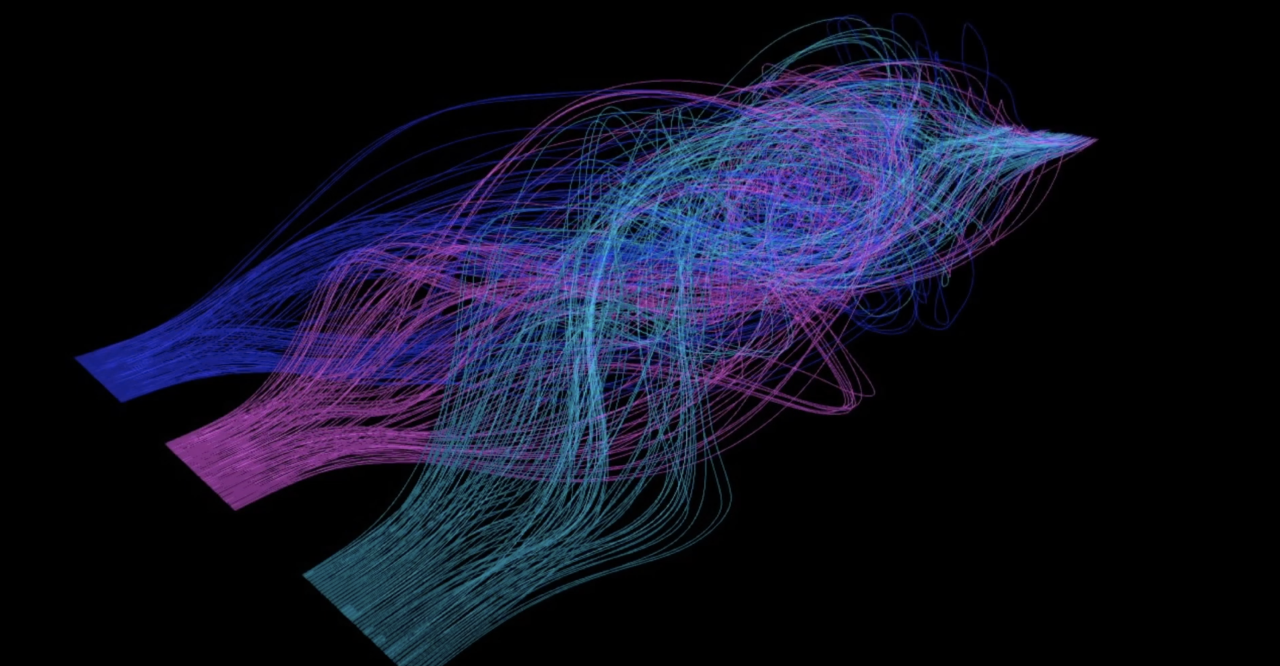

According to Michael Weinstock, concepts and techniques from the fields of mathematics and biology present an approach in which emergence, within the computational environment, is a valuable and necessary component for generating robust architectural systems. He lays out the principles of organisation, differentiation and interaction of natural systems, along with their corresponding mechanisms in mathematics, cybernetics and systems theory, to propose a computationally based paradigm for design. He characterises pattern as the most essential element.

The process that enables the generation of shape is referred to as morphogenesis. In nature, that process is directed by the qualities of the cell itself, which are encoded in its genetic code. Pattern is described as an image of growth. This draws on the idea of dynamism: architecture based on natural systems should have dynamic systems embedded within it. The development of form offers a general theory of morphogenesis, in which nodes are generated locally rather than globally, based on a set of local rules at the lattice nodes.

Conclusion

Computational Design Thinking asks the most fundamental question: is architecture a system? And if so, is the architect a person who coordinates systems whose complexity depends on the scale of the work undertaken?

The unique capabilities of the human brain must not be forgotten. It can make extraordinary guesses based on experience, and it can retrieve memories without the need for extensive searches. These aspects of intuition, perception and imagination are the traditional engines of architectural design. Human input is required as the first step in formulating the concept. Prototyping, modelling, testing, evaluation and evolution all use the power of the computer, but the initial spark comes from human creativity.